The Scientific Method in Political Science

These notes are a combination of notes from Matt A and Estelle H. Enjoy.

Topic One: What is the scientific method?

- Overview

- Science as a body of knowledge versus science as a method of obtaining knowledge

- The defining characteristics of the scientific method

- The scientific method and common sense

- The nature of scientific knowledge claims

Four Characteristics of the Scientific Method:

What are the hallmarks of the scientific method?

- Empiricism: requires systematic observation in order to verify conclusions, which are tested against our experience.

- Intersubjectivity: requires that knowledge claims be transmissible and replicable, so that other researchers can evaluate them and check for bias.

- Explanation: the goal of the scientific method. Generalized understanding by discovering patterns of internal relationships among phenomena. How variations are related.

- Determinism: a working assumption of scientific method. Assumption that behaviour has causes, recurring regularities & patterns. Causal influence. Must recognize that this assumption is not always warranted.

- Empiricism requires that every knowledge claim be based upon systematic observation.

Assumptions:

Our senses (what we can actually see, touch, hear…) can give us the most accurate and reliable information about what is happening around us. Info gained through senses is the best way to guard against subjective bias, distortion.

Obtaining information systematically through our senses helps to guard against bias.

What is ‘Intersubjectivity’ and why is it so important?

Empiricism is no guarantee of objectivity.

It is safer to work on the assumption that complete objectivity is impossible. Because we are humans studying human behaviour, our values may influence our research.

Intersubjectivity provides the essential safeguard against bias by requiring that our knowledge claims be:

- Transmissible

- The steps followed to arrive at our conclusions must be spelled out in sufficient detail that another researcher could repeat our research. Public, detailed

- Replicable

- If that researcher does repeat our research, she will come up with similar results.

In practice, research is rarely duplicated, because of funding constraints and professional incentives (replication does little for tenure and is difficult to publish).

Transmissibility and replicability enable others to evaluate our research and to determine whether our value commitments and preconceptions have affected our conclusions.

Explanation

The goal of the scientific method is explanation. A political phenomenon is explained by showing how it is related to something else.

If we wanted to explain why some regimes are less stable than others, we might relate variation in political instability to variation in economic circumstances:

- The higher the rate of inflation, the greater the political instability.

If we wanted to explain why some citizens are more involved in politics than others, we might relate variation in political involvement to variation in citizens’ material circumstances:

- The more affluent citizens are, the more politically involved they will be.

Empirical research involves a search for recurring patterns in the way that phenomena are related to one another.

The aim is to generalize beyond a particular act or time or place—to see the particular as an example of some more general tendency.

Determinism

The search for these recurring regularities necessarily entails the assumption of determinism i.e. the assumption that there are recurring regularities in political behaviour.

Determinism is only an assumption. It cannot be ‘proved’.

The assumption of determinism is valid to the extent that research proceeding from this assumption produces knowledge claims that withstand rigorous empirical testing.

The scientific method versus common sense

In a sense, the scientific method is simply a more sophisticated version of the way we go about making sense of the world around us (systematic, conscious, planned, deliberate)

—except:

- In everyday life, we often observe inaccurately, BUT users of the scientific method make systematic observations and establish criteria of relevance in advance.

- We sometimes jump to conclusions on the basis of a handful of observations—BUT users of the scientific method avoid over-generalizing (premature generalization) by committing themselves in advance to a certain number of observations.

- Once we’ve reached a conclusion, we tend to overlook contradictory evidence—BUT users of the scientific method avoid such selective observation by testing for plausible alternative interpretations. Commit themselves in advance to do so.

- When confronted with contradictory evidence, we tend to explain it away by making some additional assumptions—so do users of the scientific method BUT they make further observations in order to test the revised explanation. Can modify theory, provided new observations are gathered for the modified hypothesis.

The nature of scientific knowledge claims

Knowledge claims based on the scientific method are never regarded as ‘true’ or ‘proven’, no matter how many times they have been tested.

To be considered ‘scientific’, a knowledge claim must be testable—and if it is testable, it must always be considered potentially falsifiable.

We can never test all the possible empirical implications of our knowledge claims. It is always possible that one day another researcher will turn up disconfirming evidence.

Topic 2: Concept Formation

Overview:

- Role of Concepts in the Scientific Method

- What are Concepts?

- Nominal vs. Operational Definitions

- Four Requirements of a Nominal Definition

- Classification, Comparison and Quantification

- Criteria for Evaluating Concepts

Role of concepts in the scientific method

Concept formation is the first step toward treating phenomena, not as unique and specific, but as instances of a more general class of phenomena. Starting point of scientific study. To describe it, create a concept.

-w/out concepts, no amount of description will lead to explanation

-seeing specific as an instance of something more general

Concepts serve two key functions:

- tools for data-gathering (‘data containers’): concept is basically a descriptive word. Refers to something that is observable (directly or indirectly). Can specify attributes that indicate the presence of a concept like power.

- essential building-blocks of theories: a set of interrelated propositions. Propositions tie concepts together by showing how they’re related.

What are Concepts? (Part 1)

- A concept is a universal descriptive word that refers directly or indirectly to something that is observable. (Descriptive words can be universal or particular: we’re interested in universal words that refer to classes of phenomena.) Empirical research is concerned with the particular and specific, but only insofar as they are seen as examples of something more general.

- Universal versus particular descriptive words:

- Universal descriptive words refer to a class of phenomena.

- Particular descriptive words refer to a particular instance of that class. Collection of particulars (data) tells us nothing unless we have a way of sorting it.

- Conceptualization enables us to see the particular as an example of something more general.

- Conceptualization involves a process of generalization and abstraction. It is a creative act. Often begins with perception that seemingly disparate phenomena have something in common.

-involves replacing proper names (people, places) with concepts. Can then draw on a broader array of existing theory, research that would be more interesting.

- Generalization—in classifying phenomena according to the properties that they have in common, we are necessarily ignoring those properties that are not shared. Too many exceptions, look for similarities in exceptions that might show problem with theory.

-form concept -> generalize. But generalizing means losing detail. Tradeoff btwn generality & how many exceptions can be tolerated before theory is invalidated.

- Abstraction—a concept is an abstraction that represents a class of phenomena by labeling them. Concepts do not actually exist—they are simply labels.

-abstract concepts grasp a generic similarity(like trees)

-a concept allows us to delineate aspects that are relevant to our research. A concept is an abstraction that represents a certain phenomenon: implies that concepts do not exist, and are only labels that we attach to the phenomenon. Are defined, given meaning.

-definition starts with a word (democracy, political culture)

Real definitions: don’t enter directly into empirical research

Nominal vs. Operational Definitions

- Every concept must be given both a nominal definition and an operational definition.

- A nominal definition describes the properties of the phenomenon that the concept is supposed to represent. Literally “names,” attributes

- An operational definition identifies the specific indicators that will be used to represent the concept empirically. Indicate the extent of the presence of the concept. Literally spells out procedures/operations you have to perform to represent the concept empirically.

*When reading research, look to see how concepts are represented, look for flaws.

- The nominal definition provides a basic standard against which to judge the operational definition—do the chosen indicators really correspond to the target concept?

- A nominal definition is neither true nor false (though it may be more or less useful).

-very little agreement in poli sci on meaning & measurement. No need to define concept like age, but necessary for racism.
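One way to see the difference between the two kinds of definition is to write an operational definition out as an explicit procedure. The sketch below is only an illustration: the concept (political involvement), its nominal definition, the three indicator names and the scoring rule are all assumptions invented for the example, not taken from the lecture.

```python
# Nominal definition (hypothetical): political involvement is the extent to
# which a citizen takes part in activities intended to influence politics.
NOMINAL_DEFINITION = "extent of taking part in activities intended to influence politics"

# Operational definition (hypothetical): count how many of these survey
# indicators the respondent reports. The indicator names are invented.
INDICATORS = ("voted_last_election", "attended_political_meeting", "contacted_official")


def involvement_score(respondent):
    """Return the number of indicators the respondent answered 'yes' (True) to."""
    return sum(1 for item in INDICATORS if respondent.get(item, False))


respondent_a = {
    "voted_last_election": True,
    "attended_political_meeting": False,
    "contacted_official": True,
}
respondent_b = {"voted_last_election": False}

print(involvement_score(respondent_a))  # 2
print(involvement_score(respondent_b))  # 0
```

Because the procedure is spelled out, another researcher could apply it to the same respondents and obtain the same scores, and could also question whether these indicators really correspond to the nominal definition.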

Four requirements of a nominal definition:

- Clarity—concepts must be clearly defined, otherwise intersubjectivity will be compromised. Explicit definition.

- Precision—concepts must be defined precisely—if concepts are to serve as ‘data containers’, it must be clear what is to be included (and what can be excluded). Nothing vague should denote distinctive characteristics/policies of what is being defined. Provides criteria of relevance when it comes to setting up operational definition.

- Non-circular—a definition should not be circular or tautologous e.g. defining ‘dependency’ as ‘a lack of autonomy’.

- Positive—the definition should state what properties the concept represents, not what properties it lacks (because it will lack many properties, besides the ones mentioned as lacking in the definition).

Classification, Comparison and Quantification

Concepts are used to describe political phenomena.

Concepts can provide a basis for:

- Classification—sorting political phenomena into classes or categories. Taking concepts and sorting into different categories. e.g. types of regimes. At the heart of all science.

-1. Exhaustive: every member of the population must fit into a category.

-2. Mutually exclusive: any case should fit into one category and one only.

- Comparison—ordering phenomena according to whether they represent more—or less—of the property e.g. political stability. How much.

- Quantification—measuring how much of the property is present e.g. turnout to vote. Allows us to compare and to say how much more or less. Anything that can be counted allows for a quantitative concept. (few interesting quantitative concepts in empirical research)
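The two requirements of a classification (exhaustive and mutually exclusive) can be checked mechanically once the category rules are written down. The following is a minimal sketch under stated assumptions: the function name `check_classification`, the four regimes and their attributes are all hypothetical and serve only to show what a flawed scheme looks like.

```python
def check_classification(cases, categories):
    """Check a classification scheme against a list of cases.

    `categories` maps a category name to a rule (a function returning True
    when a case belongs in that category). Returns two lists: cases that fit
    no category (the scheme is not exhaustive) and cases that fit more than
    one category (the scheme is not mutually exclusive).
    """
    unclassified, overlapping = [], []
    for case in cases:
        matches = [name for name, rule in categories.items() if rule(case)]
        if not matches:
            unclassified.append(case["name"])
        elif len(matches) > 1:
            overlapping.append(case["name"])
    return unclassified, overlapping


# Hypothetical regimes and attributes, invented for illustration.
regimes = [
    {"name": "A", "competitive_elections": True, "military_rule": False},
    {"name": "B", "competitive_elections": False, "military_rule": True},
    {"name": "C", "competitive_elections": True, "military_rule": True},
    {"name": "D", "competitive_elections": False, "military_rule": False},
]

# A deliberately flawed scheme: the two rules can both apply, or neither.
flawed_scheme = {
    "democracy": lambda r: r["competitive_elections"],
    "military regime": lambda r: r["military_rule"],
}

unclassified, overlapping = check_classification(regimes, flawed_scheme)
print(unclassified)  # ['D']
print(overlapping)   # ['C']
```

Regime D fits no category, so the scheme is not exhaustive; regime C fits two, so it is not mutually exclusive.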

Criteria for evaluating concepts:

How? Criteria correspond to functions (data containers and building blocks)

1 Empirical Import—it must be possible to link concepts to observable properties (otherwise concepts cannot serve as ‘data containers’). However, concepts do not all need a directly observable counterpart.

Concepts can be linked to observables in 3 ways:

- directly—if the concept has a directly observable counterpart e.g. the Australian ballot. Directly observable concepts are rare in political science.

- indirectly via an operational definition—we cannot observe ‘power’ directly, but we can observe behaviours that indicate the exercise of power. Infer presence from things that are observable (power, ideology)

- Via their relationship within a theory to concepts that are directly or indirectly observable e.g. marginal utility. Such ‘theoretical concepts’ are rare in political science.

Gain empirical import b/c of relation to other part of theory.

2 Systematic (or theoretical) Import

—it must be possible to relate concepts to other concepts (otherwise concepts cannot serve as the ‘building blocks’ of theories).

Goal is explanation. Want to construct concepts while thinking of how they might be related to other concepts.

Topic Three—Theories

- Overview

- What is a theory?

- Inductive versus deductive model of theory-building

- Five criteria for evaluating competing theories

- Three functions of theories

What is a theory?

Goal = explanation. Generalize beyond the particular, see it as a part of a pattern. Treating particular as example of something more general

-explanation: step 1 form concepts: identify a property that is shared in common. Step 2 form theories: tie concepts together by stating relationships btwn them

- Normative theory versus empirical theory

- Theories tie concepts together by stating relationships between them. These statements are called ‘propositions’ if they have been derived deductively and ‘empirical generalizations’ if they have been arrived at inductively.

- A theory consists of a set of propositions (or empirical generalizations) that are all logically related to one another. Explain something by showing how it is related to something else.

- A theory explains political phenomena by showing that they are logically implied by the propositions (or empirical generalizations) that constitute the theory. A theory takes a common set of occurrences and tries to define a pattern. Once the pattern is identified, different occurrences can be treated as repeated occurrences of the same pattern. Simplify.

-tradeoff btwn how far we simplify and having a useful theory.

-skeptical mindset, try to falsify theories.

Inductive versus deductive model of theory-building

Inductive model—starts with a set of observations and searches for recurring regularities in the way that phenomena are related to one another.

Deductive model—starts with a set of axioms and uses logic to derive propositions about how and why phenomena are related to one another.

Deductive theory-building

Deductive theory-building is a process of moving from abstract statements about general relationships to concrete statements about specific behaviours.

-theory. Data enters into the process at the end. Develop theory first, then collect data.

-begins with a set of axioms, want them to be defensible.

-from axioms, reason through a set of propositions all logically implied by the same set of assumptions

-proposition asserts relationship btwn 2 concepts

-theory helps us to understand phenomena by showing that it is logically implied. Tells us how phenomena are related and that they are actually related.

-problem: logic is not enough -> need empirical verification.

-theories provide a logical base for expectations, predictions

-design research, choose tools, collect data. See if predictions hold. If so, theory somewhat validated.

-expectations stated in the form of hypotheses (as many as possible)

-a hypothesis states a relationship btwn variables

-variable is an empirical counterpart of a concept, closer to the world of observation, specific.

-any one test is likely to be flawed.

-deductive theory-building is more efficient, asking less of the data.

Inductive Theory-Building

-data

-statistical analysis, try to discover patterns. Data first then use it to develop theory.

-begin with a set of observations, discern pattern, and assume that this pattern will hold more generally

-relying implicitly on assumption of determinism

-end up with empirical generalization, which is a statement of relationship that has been established by repeated systematic observation

-ex) regime destabilized when inflation increased. Collect data on other countries. If it holds, then have empirical generalization

-inductive theory ties several empirical generalizations together

-no logical basis, therefore more vulnerable to few disconfirming instances

-less efficient, more complicated questions

-what is proper interplay btwn theory and research? In practice, it is a blend of induction and deduction.

Generalization: always have to test theory using observations other than those used in creating it. If data does not support theory, can go back & modify it, provided you then go out & collect new data to test the modified theory.

Five criteria for evaluating competing theories

–Simplicity (or parsimony): a simple theory has a higher degree of falsifiability because there are fewer restrictions on the conditions under which it is expected to hold. Use as few explanatory factors as possible. Why? A more complex theory is less generalizable and harder to falsify.

–Internal consistency (logical soundness): it should not be possible to derive contradictory implications from the same theory.

–Testability: we should be able to derive expectations about reality that are concrete and specific enough for us to make observations and determine whether the expectations are supported.

–Predictive accuracy: the expectations derived from the theory should be confirmed. Never consider a theory to be true. Instead, ask: is it useful? Does it have predictive accuracy?

–Generality: the theory should allow us to explain a wide variety of political events and behaviours across time and space. It should hold as widely as possible.

Why is there inevitably tension among these five criteria?

-different criteria can come into conflict (more generality means less predictive accuracy, more predictive accuracy is less parsimonious)

-always going to be a tradeoff: ability to explain specific cases will tradeoff with ability to explain generally. (forests vs individual trees)

-in practice, you are pragmatic. Do what makes theory more useful.

-very rare to meet all criteria in poli sci

Three functions of theories (2nd way to evaluate)

-how well they perform functions they are meant to perform

Explanation — our theory should be able to explain political phenomena by showing how and why they are related to other phenomena. Part of some larger pattern, explain why phenomena that interest us vary.

Organization of knowledge — our theory should be able to explain phenomena that cannot be explained by existing generalizations and show that those generalizations are all logically implied by our theory. Explain things that other theories cannot. Should be possible to show that existing generalizations are related to theory/one another.

Derivation of new hypotheses (the ‘heuristic function’) — our theory should enable us to predict phenomena beyond those that motivated the creation of the theory.

Suggest new knowledge/generate new hypotheses. Abstract propositions should enable us to generate lots of interesting hypotheses (beyond those that motivate the study)

Topic 4: Hypotheses and Variables

OVERVIEW

- What is a variable?

- Variables versus concepts

- What is a hypothesis?

- Independent vs. dependent variables

- Formulating hypotheses

- Common errors in formulating hypotheses

- Why are hypotheses so important?

What is a Variable?

- Concepts are abstractions that represent empirical phenomena. In order to move from the conceptual-theoretical level to the empirical-observational level, we have to find variables that correspond to our abstract concepts. Concepts are highly abstract, so they need an empirical counterpart -> variables

-empirical research always functions at 2 lvls: conceptual/theoretical and empirical/observation. Hardest part is moving from 1 to 2. Must minimize loss of meaning.

- A variable is a concept’s empirical counterpart.

- Any property that varies (i.e. takes on different values) can potentially be a variable.

- Variables are empirically observable properties that take on different values. Some variables have many possible values (e.g. income). Other variables have only two ‘values’ (e.g. sex).

-require more specificity than concepts. Enable us to take statement w/abstract concepts & translate into corresponding statement w/precise empirical reference.

-one concept may be represented by several different variables. This is desirable.

Variables vs. Concepts

Variables require more specificity than concepts.

One concept may be represented by several different variables.

What is a Hypothesis?

In order to test our theories, we have to convert our propositions into hypotheses.

A hypothesis is a conjectural statement of the relationship between two variables.

A hypothesis is logically implied by a proposition. It is more specific than a proposition and has clearer implications for testing. What we expect to observe when we make properly organized observations. Always in the form of a declarative statement. Always states relationships btwn variables.

Independent vs. Dependent Variables

Variables are classified according to the role that they play in our hypotheses

The dependent variable is the phenomenon that we want to explain.

The independent variable is the factor that is presumed to explain the dependent variable. Explanatory factor that we believe will explain variation in DV.

The dependent variable is ‘dependent’ because its values depend on the values taken by the independent variable

The independent variable is ‘independent’ because its values are independent of any other variable included in our hypothesis

Another way to think of the distinction is in terms of the antecedent (i.e. the independent variable) and the consequent (i.e. the dependent variable).

We predict from the independent variable to the dependent variable.

-the same variable can be dependent in one theory and independent in another.

Formulating Hypotheses I

Hypotheses can be arrived at either inductively (by examining a set of data for patterns) or deductively (by reasoning logically from a proposition). Which method we use depends on whether we are conducting exploratory research or explanatory research.

Hypotheses arrived at inductively are less powerful because they do not provide a logical basis for the hypothesized relationship (post hoc rationalization is no substitute for a priori theorizing).

Hypotheses can be stated in a variety of ways provided that (1) they state a relationship between two variables (2) they specify how the variables are related and (3) they carry clear implications for testing.

Like the concepts they represent, variables can classify, compare or quantify. This affects the way the hypothesis will be stated.

Formulating Hypotheses II

-When both variables are comparative or quantitative, state how the values of the DV (dependent variable) change when the IV (independent variable) changes:

-When the IV is comparative or quantitative and the DV is categorical, state which category of the DV is most likely to occur when the IV changes:

-When the IV is categorical and the DV is comparative or quantitative, state which category of the IV will result in more of the DV:

-When both the IV and the DV are categorical, state which category of the DV is most likely to occur with which category of the IV:

Common Errors in Formulating Hypotheses

Canadians tend not to trust their government.

Error #1–The statement contains only one variable. To be a hypothesis, it must be related to another variable. Not general.

To make this into a hypothesis, ask yourself whether you want to explain why some people are less trusting than others (DV) or whether you want to predict the consequences of lower trust (IV):

The younger voters are, the less likely they are to trust the government. (DV)

The less people trust the government (IV), the less likely they are to participate in politics.

Turnout to vote is related to age

Error #2 The statement fails to specify how the two variables are related—are younger people more likely to vote or less likely to vote?

The older people are, the more likely they are to vote.

Public sector workers are more likely to vote for social democratic parties.

Error #3 The hypothesis is incompletely specified (we don’t know with whom public sector workers are being compared). When the IV is categorical, the reference categories must always be made explicit.

Public sector workers are more likely to vote for social democratic parties than for neo-conservative parties.

Error #4 The hypothesis is improperly specified. This is the most common error in stating hypotheses. The comparison must always be made in terms of categories of the IV, not the DV. This is very important for hypothesis testing.

The hypothesis should state:

Public sector workers are more likely to vote for social democratic parties than private sector workers or the self-employed.

The turnout to vote should be higher among young Canadians

Error #5 This is simply a normative statement. Hypotheses must never contain words like ‘should’, ‘ought’ or ‘better than’ because value statements cannot be tested empirically.

This does not mean that empirical research is not concerned with value questions.

To turn a value question into a testable hypothesis, you could focus on factors that encourage a higher turnout or you could focus on the possible consequences of low turnout:

The higher the turnout to vote, the more responsive the government will be.

Mexico has a more stable government than Nicaragua.

Error #6 The hypothesis contains proper names. A statement that contains proper names (i.e. names of countries, names of political actors, names of political parties, etc.) cannot be a hypothesis because its scope is limited to the named entities.

To make this into a hypothesis, you must replace the proper names with a variable. Ask yourself: why does Mexico have a more stable government?

The higher the level of economic development, the more stable a government will be.

The more politically involved people are, the more likely they are to participate in politics.

Error #7 The hypothesis is true by definition because the two variables are simply different names for the same property (i.e. it is a tautology)

Decide whether you want to explain variations in political participation (DV) or to predict the consequences of variations in political participation (IV).

The more involved people are in voluntary organizations, the more likely they are to participate in politics.

*Importance of nominal definition: could be non-circular if meant emotional involvement & behavioural expectations.

Why are Hypotheses so Important?

- Hypotheses provide the indispensable bridge between theory and observation by incorporating the theory in near-testable form.

- Hypotheses are essentially predictions of the form, if A, then B, that we set up to test the relationship between A and B.

- Hypotheses enable us to derive specific empirical expectations (‘working hypotheses’) that can be tested against reality. Because they are logically implied by a proposition, they enable us to assess whether the proposition holds.

- Hypotheses direct investigation. Without hypotheses, we would not know what to observe. To be useful, observations must be for or against some point of view.

- Hypotheses provide an a priori rationale for relationships. If we have hypothesized that A and B are related, we can have much more confidence in the observed relationship than if we had just happened upon it.

- Hypotheses may be affected by the researcher’s own values and predispositions, but they can be tested, and confirmed or disconfirmed, independently of any normative concerns that may have motivated them.

- Even when hypotheses are disconfirmed, they are useful since they may suggest more fruitful lines for future inquiry—and without hypotheses, we cannot tell positive from negative evidence.

-successful hypothesis: do variables covary?

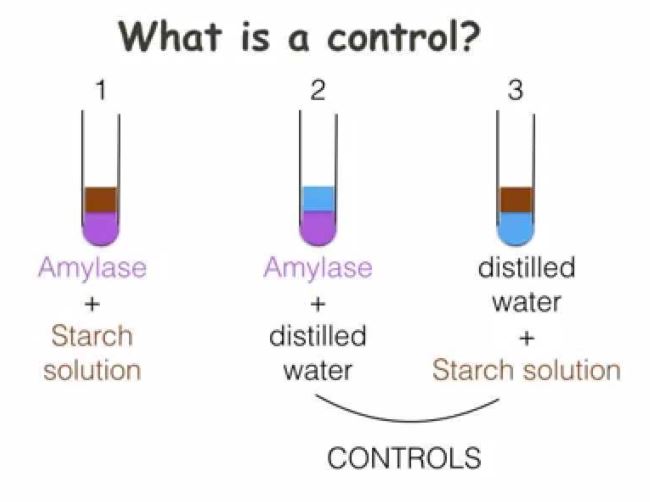

Test for other variables that might eliminate the relationship: control variables. Think about controls at the data collection stage.

Topic 5: Control Variables

OVERVIEW

- What are control variables?

- Sources of spuriousness

- Intervening variables

- Conditional variables

What are control variables?

Testing a hypothesis involves showing that the IV and the DV vary together (‘covary’) in a consistent, patterned way e.g. showing that people who have higher levels of education do tend to have higher levels of political interest.

It is never enough to demonstrate an empirical association between the IV and the DV. Must always go on to look at other variables that might plausibly alter or even eliminate the observed relationship.

Control variables are variables whose effects are held constant (literally, ‘controlled for’) while we examine the relationship between the IV and the DV.
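Checking whether two variables covary can be sketched as a simple cross-tabulation. In the illustration below, the respondents, the category labels and the helper name `percent_high_interest` are all invented for the example; the point is only that the comparison is made across categories of the IV (education).

```python
from collections import Counter

# Hypothetical respondents: (education level, political interest).
respondents = (
    [("low", "low")] * 30 + [("low", "high")] * 10
    + [("medium", "low")] * 20 + [("medium", "high")] * 20
    + [("high", "low")] * 10 + [("high", "high")] * 30
)


def percent_high_interest(data):
    """Percentage with high interest within each category of the IV (education)."""
    totals = Counter(edu for edu, _ in data)
    high = Counter(edu for edu, interest in data if interest == "high")
    return {edu: 100 * high[edu] / totals[edu] for edu in totals}


table = percent_high_interest(respondents)
for level in ("low", "medium", "high"):
    print(level, table[level])
# low 25.0
# medium 50.0
# high 75.0
```

In these made-up data the share with high political interest rises steadily with education, which is the consistent, patterned covariation the hypothesis predicts. On its own this says nothing yet about whether the relationship survives once other variables are controlled.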

Sources of Spuriousness

The mere fact that two variables are empirically associated does not mean that there is necessarily any causal connection between them

Think: pollution and literacy rates, number of firefighters and amount of fire damage, migration of storks and the birth rate in Sweden…

These are all (silly!) examples of spurious relationships. In each case, the observed relationship can be explained by the fact that the variables share a common cause

A source of spuriousness variable is a variable that causes both the IV and the DV. Remove the common cause and the observed relationship between the IV and the DV will weaken or disappear. If you overlook SS, you risk research being completely wrong.

To identify a potential (SS) source of spuriousness, ask yourself (1) whether there is any variable that might be a cause of both the IV and the DV and (2) whether that variable acts directly on the DV as well as on the IV.

If the variable only acts directly on the IV, it is not a potential source of spuriousness. It is simply an antecedent. An antecedent is not a control variable.

Sources of Spuriousness II

SS -> IV -> DV

- Example: The higher people’s income, the greater their interest in politics.

- BUT it could be spurious: education could be a source of spuriousness:

Income -> Interest in Politics

Education (source of spuriousness)

Education -> Support for Feminism

Generation (source of spuriousness)

- Some independent variables won’t have a source of spuriousness, e.g. ethnicity, religion.
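The income and education example can be made concrete with a small table of counts. Everything in the sketch below is an assumption made for illustration: the counts are invented so that education drives both income and political interest, and the helper name `interest_rate_by_income` is hypothetical. It shows what it means for a relationship to disappear once the common cause is held constant.

```python
from collections import defaultdict

# Hypothetical counts, invented so that education drives both income and interest:
# (education, income) -> (number of people, number interested in politics)
counts = {
    ("low", "low"): (80, 20),
    ("low", "high"): (20, 5),
    ("high", "low"): (20, 12),
    ("high", "high"): (80, 48),
}


def interest_rate_by_income(cells):
    """Share of people interested in politics within each income category."""
    totals = defaultdict(lambda: [0, 0])
    for (_education, income), (people, interested) in cells.items():
        totals[income][0] += people
        totals[income][1] += interested
    return {income: interested / people for income, (people, interested) in totals.items()}


# Without a control: income and interest appear to be related.
overall = interest_rate_by_income(counts)

# Holding education constant: compare income groups within each education level.
within = {
    level: interest_rate_by_income({k: v for k, v in counts.items() if k[0] == level})
    for level in ("low", "high")
}

print(overall)
print(within)
```

Overall, the high-income group shows more interest than the low-income group (53 versus 32 per cent), but within each education level the two income groups have identical rates. In these invented data the original relationship is entirely spurious.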

Intervening Variables I

Once we have eliminated potential sources of spuriousness, we must test for plausible intervening variables

Intervening variables are variables that mediate the relationship between the IV and the DV. An intervening variable provides an explanation of why the IV affects the DV

The intervening variable corresponds to the assumed causal mechanism. The DV is related to the IV because the IV affects the intervening variable and the intervening variable, in turn, affects the DV.

IV-> Intervening->DV

To identify plausible intervening variables, ask yourself why you think the IV would have a causal impact on the DV.

-can be more than one potential rationale. Intervening variable validates causal thinking.

Intervening Variables II:

- Examples:

- Women are more likely than men to favour an increase in social spending.

- GENDER -> RELIANCE ON THE WELFARE STATE -> FAVOUR INCREASE IN SOCIAL SPENDING

- The lower people’s income the more politically alienated they will be.

- PERSONAL INCOME -> PERCEPTION OF SYSTEM RESPONSIVENESS -> POLITICAL ALIENATION

Conditional variables I.

-trickiest and most common. What will happen to relation btwn IV and DV?

Once we have eliminated plausible sources of spuriousness and verified the assumed causal mechanism, we need to specify the conditions under which the hypothesized relationship holds.

Ideally, we want there to be as few conditions as possible because the aim is to come up with a generalization.

Conditional variables are variables that literally condition the relationship between the IV and the DV by affecting:

(1) the strength of the relationship between the IV and the DV (i.e. how well do values of the IV predict values of the DV?) and

(2) the form of the relationship between the IV and the DV (i.e. which values of the DV tend to be associated with which values of the IV?)

-focus is always on its effect on the hypothesized relation btwn IV and DV in every category of the conditional variable. Ex) category = religion: Christian, Muslim, Atheist. Or: important, somewhat important, not important.

To identify plausible (CV) conditional variables, ask yourself whether there are some sorts of people who are likely to take a particular value on the DV regardless of their value on the IV.

Note: the focus is always on how the hypothesized relationship is affected by different values of the conditional variable.

There are basically three types of variables that typically condition relationships:

(1) variables that specify the relationship in terms of interest, knowledge or concern. Example (interest, knowledge or concern):

Catholics are more likely to oppose abortion than Protestants.

If CV = attends church, then religious affiliation -> views on abortion.

If CV = does not attend church, then religious affiliation does not -> views on abortion.

(2) variables that specify the relationship in terms of place or time. (where are they from?) Example (place or time):

The higher people’s incomes, the more likely they are to participate in politics

If CV = non-rural resident, then income -> political participation

If CV = rural resident then income does not -> political participation

(3) variables that specify the relationship in terms of social background characteristics.

Examples (Social Background Characteristics):

The more religious people are, the more likely they are to oppose abortion.

If CV = male then religiosity -> views on abortion

If CV = female then religiosity does not -> views on abortion
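A conditional variable shows up as a relationship that differs in strength or form from one category of the CV to another. The sketch below uses the place-of-residence example with invented counts (not real data); `counts`, `pct_participating` and `income_gap` are hypothetical names chosen for illustration.

```python
# Hypothetical counts: (residence, income, participates) -> number of respondents.
counts = {
    ("non-rural", "low", True): 30, ("non-rural", "low", False): 70,
    ("non-rural", "high", True): 70, ("non-rural", "high", False): 30,
    ("rural", "low", True): 25, ("rural", "low", False): 25,
    ("rural", "high", True): 25, ("rural", "high", False): 25,
}

def pct_participating(residence, income):
    """Percentage who participate within one residence/income group."""
    yes = counts[(residence, income, True)]
    no = counts[(residence, income, False)]
    return 100 * yes / (yes + no)

def income_gap(residence):
    """Strength of the income -> participation relationship for one CV category."""
    return pct_participating(residence, "high") - pct_participating(residence, "low")

gaps = {place: income_gap(place) for place in ("non-rural", "rural")}
print(gaps)  # {'non-rural': 40.0, 'rural': 0.0}
```

Here the hypothesized relationship holds for non-rural residents (a 40-point gap) and vanishes for rural residents, so residence conditions the relationship in this invented example.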

Stages in Data Analysis:

Test hypothesis –> Test for Spuriousness –> If non-spurious, test for intervening variables –> test for conditional variables.

Topic 6: Research Problems and the Research Process

OVERVIEW

- What is a research problem?

- Maximizing generality

- Why is generality important?

- Overview of the research process

- Stages in data analysis

What is a research problem?

A properly formulated research problem should take the form of a question: how is concept A related to concept B?

Examples:

How is income inequality related to regime type?

How is moral traditionalism related to gender?

How is civic engagement related to social networks?

Maximizing Generality

Aim for an abstract and comprehensive formulation rather than a narrow and specific one.

Example: you want to explain support for the Parti Québécois.

A possible formulation of the research problem:

How is concern for the future of the French language related to support for the PQ?

A better formulation of the research problem:

How is cultural insecurity related to support for nationalist movements?

Why is Generality Important?

- Goal of the empirical method is to come up with a generalization.

- Greater contribution because findings will have implications beyond the particular puzzle that motivated the research.

- Access to a more diverse theoretical and empirical literature in developing a tentative answer to the research question.

The Research Process

Find a puzzle or anomaly –> Formulate the research problem. How is A related to B? –> Develop hypothesis explaining how and why A and B are related –> Identify plausible sources of spuriousness, intervening variables and conditional variables. –> Choose indicators to represent the IV, DV and control variables (‘operationalization’) –> Collect and analyze the data.

Stages in Data Analysis:

Test hypothesis –> Test for Spuriousness –> If non-spurious, test for intervening variables –> test for conditional variables.

Topic 7: From concepts to indicators

Overview:

- What is ‘operationalization’?

- What are indicators?

- Converting a proposition into a testable form

- Key properties of an operational definition

An example: operationalizing ‘socio-economic status’

What is Operationalization?

Operationalization is the process of selecting observable phenomena to represent abstract concepts.

When we operationalize a concept we literally specify the operations that have to be performed in order to establish which category of the concept is present (classificatory concepts) or the extent to which the concept is present (comparative or quantitative concepts).

The end product of this process is the specification of a set of indicators.

What are indicators?

Indicators are observable properties that indicate which category of the concept is present or the extent to which the concept is present.

In order to test our theory, we examine whether our indicators are related in the way that our theory would predict.

The predicted relationship is stated in the form of a working hypothesis.

The working hypothesis is logically implied by one of the propositions that make up our theory. Because it is logically implied by the proposition, evidence about the validity of the working hypothesis can be taken as evidence about the validity of the proposition.

Converting a Proposition into a Testable Form I

Concept -> proposition -> concept

Variable -> hypothesis -> variable

Indicator -> working hypothesis -> indicator

Converting a Proposition into a Testable Form II

Just as it is possible to represent one concept by several different variables, so it is possible—and desirable—to represent one variable by several different indicators.

Concept: variable (2 or more): Indicator (2 or more each).

Key Properties of an Operational Definition

The operational definition specifies the indicators by setting out the procedures that have to be followed in order to represent the concept empirically.

A properly framed operational definition:

-adds precision to concepts

-makes propositions publicly testable

This ensures that our knowledge claims are transmissible and makes replication possible.

An Example: Operationalizing ‘Socio-Economic Status’

The first step in representing a concept empirically is to provide a nominal definition that sets out clearly and precisely what you mean by your concept:

Socio-Economic Status: ‘a person’s relative location in a hierarchy of material advantage’.

Socio-economic status:

- Income: earnings from employment, annual household income

- Wealth: value of assets, home ownership

Topic Eight: Questionnaire Design and Interviewing

Overview:

-The function of a questionnaire

-The importance of pilot work and pre-testing

-Open-ended versus close-ended questions

-Advantages and disadvantages of close-ended questions

-Advantages and disadvantages of open-ended questions

-Ordering the questions

-Common errors in question wording

-A checklist for identifying problems in the pre-test

-important to know what makes good survey research

-simply a formal way of asking people questions: attitudes, beliefs, background, opinions

-follows a highly standardized, structured, thought-out sequence

The Function of a Questionnaire

-The function of a questionnaire is to enable us to represent our variables empirically.

-Respondents’ coded responses to our questions serve as our indicators.

-The first step in designing a questionnaire is to identify all of the variables that we want to represent (i.e. independent variables, dependent variables, control variables).

Do not pose a hypothesis directly. One question cannot operationalize two variables.

-We must always keep in mind why we are asking a given question and what we propose to do with the answers.

-A question should never pose a hypothesis directly. We test our hypotheses by examining whether people’s answers to different questions go together in the way that our hypotheses predicted.

The Importance of Pilot Work

Second step: pilot work

Careful pilot work is essential in designing a good questionnaire. Background work to prepare surveys.

Pilot work can involve:

-lengthy unstructured interviews with people typical of those we want to study

-talks with key informants

-reading widely about the topic in newspapers, magazines and on-line in order to get a sense of the range of opinion.

The Importance of Pre-testing

Third step: draft a questionnaire

Fourth step: pretest questionnaire

Once a questionnaire has been drafted, it should be pre-tested using respondents who are as similar as possible to those we plan to survey

-ideally, people you test are typical of group you want to represent.

-purposive/judgmental sampling: use knowledge of population to choose subjects

-pretest very important & often humbling

Pre-testing can help with:

- identifying flawed questions

- improving question wording

- ordering questions

- determining the length of time it takes to answer the questionnaire or interview the respondents

- assessing whether responses are affected by characteristics of the interviewer

- improving the wording of the survey introduction (who am I, what I’m doing, why I’m doing it. Doesn’t say what hypotheses are.)

Open-Ended versus Close-Ended Questions

Surveys typically include a small number of open-ended questions and a larger number of close-ended questions.

In open-ended questions, only the wording of the question is fixed. The respondent is free to answer in his or her own words. The interviewer must record the answer word-for-word, w/out abbreviations.

In close-ended questions, the wording of both the question and the possible response categories is fixed. The respondent selects one answer from a list of pre-specified alternatives. (don’t read out “other”, but should be present in case they say something else)

Advantages of Close-Ended Questions

- help to ensure comparability among respondents

- ensure that responses are relevant. Allows comparison

- leave little to the discretion of the interviewer. Respondent has control over classification of their answer.

- take relatively little interviewing time: quick to ask & answer

- easy to code, process, and analyze the responses

- give respondents a useful checklist of possibilities

- help people who are not very articulate to express an opinion

Disadvantages of Close-Ended Questions

- may prompt people to answer even though they do not have an opinion (preferable not to offer “no opinion” but have it on questionnaire. Difference btwn don’t know and no answer.)

- may channel people’s thinking, producing responses that do not really reflect their opinion. Bias results.

- may overlook some important possible responses

- may result in a loss of rapport with respondents: throw in open-ended to engage people

- misunderstanding (if using terms that could be difficult, provide definition for interviewers. Don’t adlib.)

The responses to close-ended questions must always be interpreted in light of the pre-set alternatives that were offered to respondents.

Advantages and Disadvantages of Open-Ended Questions

Advantages

Open-ended questions avoid the disadvantages of close-ended questions. They can also provide rich contextual material, often of an unexpected nature. (quotes can make report more interesting).

-avoid putting ideas in people’s heads

-can engage people

Disadvantages

Open-ended questions are easy to ask—but they are difficult to answer and still more difficult to analyze. Open-ended questions:

- take up more interviewing time and impose a heavier burden on the interviewer

- increase the possibility of interviewer bias if the interviewer ends up paraphrasing the responses

- require more processing

- increase the possibility of researcher bias since the responses have to be coded into categories for the purpose of analysis (must reduce to a set of numbers. Introduce risk of bias. Getting others to code for intersubjectivity is time consuming and expensive.)

- the classification of responses may misrepresent the respondent’s opinion. Respondents have no control over how their response is used.

- transmissibility and hence replicability may be compromised by the coding operation

- respondents may give answers that are irrelevant. Solution: use open-ended in pilot study, then create close ended with answers. Some amount of info lost, less likely to overlook important alternative.

-close-ended response categories must be mutually exclusive and cover every category.

-avoid multiple answers (which is closest, comes closest to point of view)

-can have open & close-ended versions of same question, spread out in survey. Always open first.

Ordering the Questions

Question sequence is just as important as question wording. The order in which questions are asked can affect the responses that are given:

- make sure that open-ended and close-ended versions of the same question are widely separated and that the open-ended version is asked first. (sufficiently separated)

- if two questions are asked about the same topic, make sure that the first question asked will not colour responses to the subsequent question. Change order or separate questions.

- avoid posing sensitive questions too early in the questionnaire.

- begin with non-threatening questions that engage the respondent’s interest and seem related to the stated purpose of the survey. Help create rapport.

- ensure some variety in the format of the questions in order to hold the respondent’s attention.

-when reading over questionnaire, try to think how you would react. Not intimidating. Shouldn’t seem like a test

-have you unwittingly made your own views obvious and favoured a particular position?

-worded in a friendly, conversational way. Should seem natural.

-writing questions is likened to catching a particularly elusive fish.

-making assumptions that everyone understands the question the same way. The way you intended, assuming people have necessary information. Make questions unambiguous. Problem: people will express non-attitudes.

-if problems writing questions, often b/c not completely clear on topic concept. Importance of nominal definition.

Common Errors in Question Wording

‘Do you agree or disagree with the supposition that continued constitutional uncertainty will be detrimental to the Quebec economy?’

Error #1: the question uses language that may be unfamiliar to many respondents. The wording should be geared to the expected level of sophistication of the respondents.

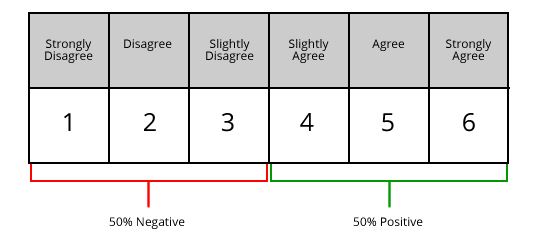

‘Please tell me whether you strongly agree, somewhat agree, somewhat disagree or strongly disagree with the following statements:

People like me have no say in what the government does

The government doesn’t care what people like me think’

Error #2: the wording of the statements is vague (the federal government? the provincial government? the municipal government?) Questions must always be worded as clearly as possible. (time, place, lvl of govt)

‘It doesn’t matter which party is in power, there isn’t much governments can do these days about basic problems’

Error #3: this is a double-barreled question. A respondent could agree with one part of the question and disagree with the other.

‘In federal politics, do you usually think of yourself as being on the left, on the right, or in the center?’

Error #4: this question assumes that the respondent understands the terminology of left and right.

‘Would you favor or oppose extending the North American Free Trade Agreement to include other countries?’

Error #5: this question assumes that respondents are competent to answer. Also doesn’t say to what other countries. Solution: filter question: Do you happen to know what NAFTA is? People will want to answer even if they don’t know what it is (ex, fictitious topics). Lack of information.

‘Should welfare benefits be based on any relationship of economic dependency where people are living together, such as elderly siblings living together or a parent and adult child living together or should welfare benefits only be available to those who are single or married and/or have children under the age of 18 years?’

Error #6: this question is too wordy. In a self-administered survey, a question should contain no more than 20 words. In a face-to-face or telephone survey, it must be possible to ask the question comfortably in a single breath.
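The 20-word guideline for self-administered surveys is easy to check mechanically when drafting. The following is only a rough sketch: it counts whitespace-separated words, and the function names `word_count` and `is_too_wordy` are hypothetical.

```python
def word_count(question):
    """Count whitespace-separated words in a question."""
    return len(question.split())

def is_too_wordy(question, limit=20):
    """True if the question exceeds the word limit (default: 20 words)."""
    return word_count(question) > limit

long_question = (
    "Should welfare benefits be based on any relationship of economic "
    "dependency where people are living together, such as elderly siblings "
    "living together or a parent and adult child living together or should "
    "welfare benefits only be available to those who are single or married "
    "and/or have children under the age of 18 years?"
)
short_question = (
    "Do you agree or disagree that gay marriages should be legally "
    "recognized in Canada?"
)

print(is_too_wordy(long_question))   # True
print(is_too_wordy(short_question))  # False
```

A word count cannot tell us whether a question can be asked comfortably in a single breath, so interview questions still need to be read aloud in the pre-test.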

‘Do you agree that gay marriages should be legally recognized in Canada?’

Error #7: this is a leading question that encourages respondents to agree. The problem could be avoided by adding ‘or disagree’. It is especially important to avoid leading questions on sensitive topics.

‘Canada has an obligation to see that its less fortunate citizens are given a decent standard of living’.

Error #8: this question is leading because it uses emotionally-laden language e.g. ‘less fortunate’, ‘decent’. Can also be leading by identifying with prestigious person or institution like Supreme Court, or w/someone who is disliked.

‘How often have you read about politics in the newspaper during the last week?’

Error #9: this question is susceptible to social desirability bias because it seems to assume that the respondent has read the newspaper at least once during the previous week. People answer through filter of what makes them look good. “Have you had time to read the newspaper in the last week?”

-don’t abbreviate

-no more than 1 question per line

-open-ended must have space to write

-clear instructions

-informed consent

-privacy/confidentiality

A Checklist for Identifying Problems in the Pre-Test

- Did close-ended questions elicit a range of opinion or did most respondents choose the same response category?

- Do the responses tell you what you need to know?

- Did most respondents choose ‘agree’ (the question was too bland -> should protect nature) or did most respondents choose ‘disagree’ (the question was too strongly worded -> abortion is murder)?

- Did respondents have problems understanding a question? Were there a lot of don’t knows? (if they don’t get it, ask it again and move on)

- Did several respondents refuse to answer the same question?

- Did open-ended questions elicit too many irrelevant answers? (can you code responses)

- Did open-ended questions produce yes/no or very brief responses? Add a probe. (best probe is silence, pen poised to record)

Topic 9: Content Analysis

Overview:

What is content analysis?

What can we analyze?

What questions can we answer?

Selecting the communications

Substantive content analysis

Substantive content analysis: coding manifest content

Substantive content analysis: coding latent content

Structural content analysis

Strengths of content analysis

Weaknesses of content analysis

What is content analysis?

-involves the analysis of any form of communication

-communications form the basis for drawing inferences about causal relations

-Content analysis is ‘any technique for making inferences by systematically and objectively identifying specified characteristics of communications’. (Holsti)

-Systematically means that content is included or excluded according to consistently applied criteria.

-Objectively requires that the identification be based on explicit rules. The categories used for coding content must be defined clearly enough and precisely enough that another researcher could apply them to the same content and obtain the same results

(transmissibility+replicability=intersubjectivity).

What can we analyze?

Content analysis can be performed on virtually any form of communication (books, magazines, poems, songs, speeches, diplomatic exchanges, videos, paintings…) provided:

- there is a physical record of the communication.

- the researcher can obtain access to that record

A content analysis can focus on one or more of the following questions: ‘who says what, to whom, why, how, and with what effect?’ (Lasswell)

-who/why: inferences about sender of the communication, causes or antecedents. Why does it take the form that it does?

-with what effect: inferences about effects on person(s) who receives it

What questions can we answer?

Content analysis can be used to:

- test hypotheses about the characteristics or attributes of the communications themselves (what? how?)

- make inferences about the communicator and/or the causes or antecedents of the communication (who? why?)

- make inferences about the effect of the communication on the recipient(s) (with what effect?)

Rules of Content analysis

i. specify rules for selecting communications that will be analyzed

ii. specify characteristics you will analyze (what aspects of content)

iii. formulate rules for identifying characteristics when they appear

iv. apply the coding scheme to the selected communications

Selecting the communications

The first step is to define the universe of communications to be analyzed by defining criteria for inclusion.

Typical criteria include:

- the type of communication

- the location, frequency, minimum size or length of the communication

- the distribution of the communication

- the time period

- the parties to the communication (if communication is two-way or multi-way)

If too many communications meet the specified criteria, a sampling plan must be specified in order to make a representative selection.

-if study is comparative, must choose comparable communications. Control in content analysis is the way communications are chosen (as similar as possible except one thing).

Type of Analysis (substantive vs structural)

Substantive content analysis

-In a substantive content analysis, the focus is on the substantive content of the communication—what has been said or written.

-A substantive content analysis is essentially a coding operation.

-The researcher codes—or classifies—the content of the selected communications according to a pre-defined conceptual framework

Examples:

- coding newspaper editorials according to their ideological leaning

- coding campaign coverage according to whether it deals with matters of style or substance

Substantive Content Analysis: Coding Manifest Content

-A substantive content analysis can involve coding manifest content and/or latent content

-Coding manifest content means coding the visible surface content i.e. the objectively identifiable characteristics of the communication

-list of words/phrases that are empirical counterparts to your concept (the hard part!)

-important to relate it to some sort of base -> longer means more likely to use particular words

-Example: choosing certain words or phrases as indicators of the values of key concepts and then simply counting how often those words or phrases occur within each communication.
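Counting indicator words and relating the count to a base can be sketched as follows. The keyword list and the two texts are invented for illustration, and `keyword_rate` is a hypothetical helper, not a standard function. The point is that a longer text can contain more keyword occurrences yet use them at a lower rate.

```python
import re

def keyword_rate(text, keywords, per=1000):
    """Occurrences of the keywords per `per` words of text."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in keywords)
    return per * hits / len(words)

# Hypothetical indicator words for a 'warfare' coding category.
warfare_words = {"war", "battle"}

short_text = "the war on taxes is a battle we will win"   # 10 words, 2 hits
# Longer text: the same sentence twice plus 20 neutral words (40 words, 4 hits).
long_text = " ".join([short_text, short_text] + ["policy"] * 20)

print(keyword_rate(short_text, warfare_words))  # 200.0 per 1,000 words
print(keyword_rate(long_text, warfare_words))   # 100.0 per 1,000 words
```

The longer text has twice as many raw hits but half the rate, which is why raw counts should be standardized by length before communications are compared.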

Advantages:

- Ease

- Replicability

- Reliability (consistency)

Intersubjectivity?

Disadvantages

- meaning depends on context

- loss of nuance and subtlety of meaning

-possible that word is being used in an unexpected way (irony, sarcasm)

-validity: are we really measuring what we think we’re measuring?

Substantive Content Analysis: Coding Latent Content

Coding latent content involves coding the underlying meaning. (tone of media, etc)

Example:

- reading an entire newspaper editorial and making a judgment as to its overall ideological leaning.

- reading an entire newspaper story and making a judgment as to whether the person covered is portrayed in a positive, negative, or neutral light.

Advantages

(1) less loss of meaning and thus higher validity.

Disadvantages

(1) requires the researcher to make judgments and infer meaning, thus increasing risk of bias.

(2) lower reliability -> differences in judgment

(3) lower transmissibility and hence replicability. -> cannot communicate to a reader exactly how judgement was made

-researcher is making judgments about meaning, which may be influenced by own values

Solution: take 1 hypothesis & test it different ways. More compelling, more experience w/ pros and cons of content analysis. Test hypothesis as many ways as possible.

-strive for high intercoder reliability (2 people recode independently, 90% similarity)

-use all 3 methods
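Intercoder reliability in its simplest form is the percentage of items that two independent coders classify the same way. The sketch below uses invented codes for ten editorials; `percent_agreement` is a hypothetical helper. Simple percent agreement does not correct for agreement that would occur by chance, so it should be read as a rough check only.

```python
def percent_agreement(coder_a, coder_b):
    """Percentage of items that two coders placed in the same category."""
    if len(coder_a) != len(coder_b):
        raise ValueError("Both coders must code the same set of items")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * matches / len(coder_a)

# Hypothetical codes assigned independently to the same ten editorials.
coder_a = ["left", "right", "centre", "left", "right",
           "left", "centre", "right", "left", "right"]
coder_b = ["left", "right", "centre", "left", "right",
           "left", "centre", "right", "left", "centre"]

print(percent_agreement(coder_a, coder_b))  # 90.0
```

In this made-up example the coders disagree on one editorial out of ten, which just meets the 90% target mentioned above.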

Structural Content Analysis

A structural content analysis focuses on physical measurement of content.(time, space)

Examples:

- how much space does a newspaper accord a given issue (number of columns, number of paragraphs, etc.)?

- how much prominence does a newspaper accord a given issue (size of headline, placement in the newspaper, presence of a photograph, etc.)?

- how many minutes does a news broadcast give to stories about each political party?

- Column inches, seconds of airtime, order of stories, pages, paragraphs, size of headline, photograph= measures of prominence

Measurements of space and time must always be related to the total size/length of the communication

-standardize: relative to size w/same paper, not compare headline size in 2 papers
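Standardizing a structural measure means expressing it as a share of the total space or time available. The figures below are invented for illustration, and `share` is a hypothetical helper.

```python
def share(part, total):
    """Space or time given to an issue as a percentage of the whole."""
    return 100 * part / total

# Hypothetical column inches devoted to an issue, and total column inches.
paper_a = {"issue": 40, "total": 400}
paper_b = {"issue": 60, "total": 1200}

share_a = share(paper_a["issue"], paper_a["total"])
share_b = share(paper_b["issue"], paper_b["total"])

print(share_a)  # 10.0
print(share_b)  # 5.0
```

Paper B prints more column inches on the issue in absolute terms, but paper A devotes twice the share of its space to it, so the relative measure reverses the comparison.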

Advantages

- reliability

- replicability -easy to explain methods

Disadvantages

- loss of nuance & subtlety of meaning

-less valid: can you really represent subtle nuanced ideas by counting/measuring?

Strengths of Content Analysis

-economy

-generalizability (external validity). Representative, more confidence.

-safety: risk of missing something, time, etc. is non-existent here. You can recode.

-ability to study historical events or political actors: asking people means you get answers they think now, not what they thought then

-ability to study inaccessible political actors (Supreme Court justices)

-unobtrusive (non-reactive)

-reliability: highly reliable way of doing research, consistent results (structural, manifest)

-few ethical dilemmas. Communications have already been produced, won’t harm or embarrass people.

Weaknesses of content analysis

-requires a physical record of communication

-need access to communications

-loss of meaning (low validity): are we measuring what we think we’re measuring?

-risky to infer motivations—political actors do not necessarily mean what they write or say. (Take into account purpose of communication if asking why)

-laborious and tedious

-subjective bias -> important elements of subjectivity (latent analysis: making judgements, inferences about meaning)

-> no one best way of doing content analysis. Do all 3.

Major Coding Categories

-warfare: a battle royal, political equivalent of heat seeking missiles, fighting a war on several fronts, a night of political skirmishes, took a torpedo in the boilers, master of the blindside attack

-general violence: a good old-fashioned free-for-all, one hell of a fight, assailants in the alley

-sports and games: contestants squared off, left on the mat, knockout blow

-theatre and showbiz: a dress rehearsal, got equal billing, put their figures in the spotlight

-natural phenomena: nothing earth-shattering, an avalanche of opinion

-other

Coding Statements

-descriptive: present the who, what, where, when, without any meaningful qualification or elaboration

-analytical: draw inferences or reach conclusions (typically about the causes of the behaviour or event) based on fact not observed

-evaluative: make judgments about how well the person being reported on performed

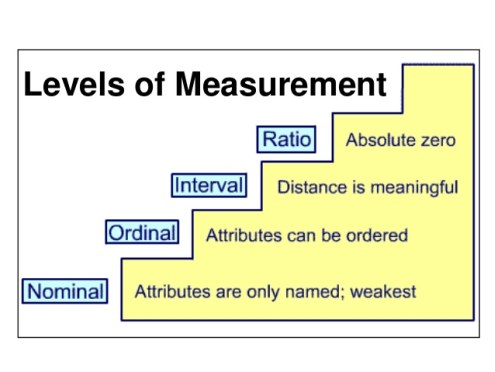

Topic 10: Measurement

Overview:

What is measurement?

Rules and levels of measurement

Nominal-level measurement

Ordinal-level measurement

Interval-level measurement

Ratio-level measurement

What is Measurement?

-foundation of statistics

Measurement is the process of assigning numerals to observations according to rules.

These numerals are referred to as the values of the variable we are measuring (not numbers but numerals: numerals are simply symbols or labels, whereas numbers have quantitative meaning).

Measurement can be qualitative or quantitative.

If we want to measure something, we have to make up a set of rules that specify how the numerals are to be assigned to our observations.

Rules and Levels of Measurement

-The rules determine the level, or quality, of measurement achieved. <- most important part of definition.

-The level of measurement determines what kinds of statistical tests can be performed on the resulting data.

-The level of measurement that can be achieved depends on:

- the nature of the property being measured

- the choice of data collection procedures

-The general rule is to aim for the highest possible level of measurement because higher levels of measurement enable us to perform more powerful and more varied tests.

-The rules can provide a basis for classifying, ordering or quantifying our observations.

-at the nominal level: no hierarchical order, can substitute any numeral for any other numeral. All the numerals indicate is that the categories are different.

4 Levels: NOIR

Nominal-level measurement

Ordinal-level measurement

Interval-level measurement

Ratio-level measurement

Nominal-level measurement

-Nominal-level measurement represents the lowest level of measurement, most primitive, least information

-Nominal measurement involves classifying a variable into two or more (predefined) categories and then sorting our observations into the appropriate category.

-The numerals simply serve to label the categories. They have no quantitative meaning. Words or symbols could perform the same function. There is no hierarchy among the categories and the categories cannot be related to one another numerically. The categories are interchangeable.

-classify

-Rule: do not assign the same numeral to different categories or different numerals to the same category. The categories must be exhaustive and mutually exclusive.

Ex) sex, religion, ethnic origin, language

Ordinal-Level Measurement

-Ordinal-level measurement involves classifying a variable into a set of ordered categories and then sorting our observations into the appropriate category according to whether they have more or less of the property being measured. Allows ordering and classifying. Notion of hierarchy.

-The categories stand in a hierarchical relationship to one another and the numerals serve to indicate the order of the categories. Numerals stand for relative amount of the property.

-classify, order

-more useful, direction of relation btwn variables

-With ordinal-level measurement, we can say only that one observation has more of the property than another. We can not say how much more.

Ex) social class, strength of party loyalty, interest in politics

Interval-Level Measurement

-Interval-level measurement involves classifying a variable into a set of ordered categories that have an equal interval (fixed and known interval) between them and then sorting our observations into the appropriate category according to how much of the property they possess.

-There is a fixed and known interval (or distance) between each category and the numerals have quantitative meaning. They indicate how much of the property each observation has (actual amount).

-Classify, order, meaningful distances.

-With interval-level measurement, we can say not only that one observation has more of the property than another, we can also say how much more.

-BUT we cannot say that one observation has twice as much of the property than another observation. Zero is arbitrary.

Ex) Celsius and Fahrenheit scales of temperature
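
A quick way to see why ratio statements fail at the interval level is to express the same two temperatures on both scales. The sketch below uses two made-up temperatures (the variable names are illustrative only): the gap between them converts consistently from one scale to the other, but the "twice as much" ratio does not survive the change of scale.

```python
def celsius_to_fahrenheit(c):
    """Convert a Celsius temperature to Fahrenheit."""
    return c * 9 / 5 + 32

# Two illustrative temperatures (hypothetical values).
cold_c, warm_c = 10.0, 20.0
cold_f = celsius_to_fahrenheit(cold_c)
warm_f = celsius_to_fahrenheit(warm_c)

# Intervals are meaningful: a 10-degree Celsius gap is an 18-degree Fahrenheit gap.
gap_c = warm_c - cold_c
gap_f = warm_f - cold_f

# Ratios are not: the result depends on where each scale happens to put zero.
ratio_c = warm_c / cold_c
ratio_f = warm_f / cold_f

print(f"gap:   {gap_c} C = {gap_f} F")
print(f"ratio: {ratio_c} on the Celsius scale, {ratio_f} on the Fahrenheit scale")
```

Because the ratio changes with the scale, saying that 20 degrees is "twice as hot" as 10 degrees has no fixed meaning.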

Ratio-Level Measurement (highest)

-The only difference between ratio-level measurement and interval-level measurement is the presence of a non-arbitrary zero point.

-A non-arbitrary zero point means that zero indicates the absence of the property being measured.

-Now we can say that one observation has twice as much of the property as another observation.

-Any property that can be represented by counting can be measured at the ratio-level.

-classify, order, meaningful distance, non-arbitrary zero

Ex) income, years of schooling, gross national product, number of alliances, turnout to vote

-in poli sci, few things are above the ordinal level. Stretches credulity to believe that we could come up with equal units of collectivism or alienation.

-anything that can be measured at a higher lvl can be measured at a lower lvl

-always try to achieve highest lvl of measurement. Constrained by technique used to collect data.

Topic Eleven: Statistics: Describing Variables

Overview:

Descriptive versus inferential statistics

Univariate, bivariate and multivariate statistics

Univariate descriptive statistics

Describing a distribution

Measuring central tendency

Measuring dispersion

Descriptive versus Inferential Statistics

Descriptive statistics are used to describe characteristics of a population or a sample.

Inferential statistics are used to generalize from a sample to the population from which the sample was drawn. They are called ‘inferential’ because they involve using a sample to make inferences about the population.

Univariate, Bivariate and Multivariate Statistics

Univariate statistics are used when we want to describe (descriptive) or make inferences about (inferential) the values of a single variable.

Bivariate statistics are used when we want to describe (descriptive) or make inferences about (inferential) the relationship between the values of two variables.

Multivariate statistics are used when we want to describe (descriptive) or make inferences about (inferential) the relationship among the values of three or more variables.

-can all be descriptive or inferential

Univariate Descriptive Statistics

Data analysis begins by describing three characteristics of each variable under study:

- the distribution : how many cases take each value?

- the central tendency: which is the most typical value? best represents a typical case

- the dispersion: how much do values vary? how spread out are cases across the possible categories? If there is much dispersion, measure of central tendency may be misleading.

-frequency value tells us how many cases take each of the possible values. Records the frequency with which each possible value occurs.

Describing a Distribution I

Knowing how the observations are distributed across the various possible values of the variable is important because many statistical procedures make assumptions about the distribution. If those assumptions are not met, the procedure is not appropriate.

A frequency distribution is simply a list of the number of observations in each category of the variable. It is called a frequency distribution because it displays the frequency with which each possible value occurs.
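
As a rough illustration, a frequency distribution can be built by counting how often each value occurs. The responses below are hypothetical, and `Counter` from Python's standard library is just one convenient way to do the counting.

```python
from collections import Counter

# Hypothetical survey responses for a nominal variable (religion).
responses = ["Catholic", "Protestant", "None", "Catholic", "Other",
             "Protestant", "Catholic", "None", "Catholic", "Protestant"]

# Count how many cases take each possible value.
frequency = Counter(responses)
n_cases = sum(frequency.values())

# One row per category, followed by the total number of cases.
for category, count in frequency.most_common():
    print(f"{category:<12}{count}")
print(f"{'Total':<12}{n_cases}")
```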

Describing a distribution:

Raw frequencies (how many cases took on the different possible values)

-title should be informative: it tells us the variable for which data are being presented. It should not interpret the table.

-source: name source

-footnote

-totals are difficult to compare, translate into %

-gives a relative idea of what to expect in the rest of the population

-gives a consistent base to make comparisons

-never report % w/out also reporting total # of cases in survey. Makes data meaningful.

– no % w/fewer than 20 cases: present raw frequency

-if data come from a sample, round off percentages to the nearest whole number, should assume that there is error.

-round up for .6-.9; round down for .1-.4; with .5, round to the nearest even number.

-99, 100, and 101% are acceptable totals. Can add note saying that numbers may not add up to 100.

-present in form of graph or chart. Contains exact same info, but easier to visualize. More interpretable, more appealing. Pie-chart, line graph.

-tricks: truncated scale to make things look better/worse. Always check the scaling.

-need to check distribution to make sure that it's appropriate to use a particular statistic
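
The percentage rules above can be sketched in a few lines. The party labels and counts are hypothetical, and the 20-case threshold is written as a constant named here only for illustration. Python's built-in `round()` sends values that sit exactly on .5 to the nearest even number, which matches the rounding rule in these notes.

```python
# Hypothetical raw frequencies from a sample of 40 respondents.
raw = {"Party A": 17, "Party B": 13, "Party C": 10}
n_cases = sum(raw.values())

MIN_CASES_FOR_PERCENT = 20  # with fewer cases, present raw frequencies only

if n_cases >= MIN_CASES_FOR_PERCENT:
    # round() uses round-half-to-even: 42.5 -> 42, 32.5 -> 32.
    percents = {party: round(100 * count / n_cases) for party, count in raw.items()}
else:
    percents = {}

for party, pct in percents.items():
    print(f"{party}: {pct}%")

# Always report the number of cases alongside the percentages.
total_percent = sum(percents.values())
print(f"Total: {total_percent}% (N = {n_cases})")
```

Here the rounded percentages sum to 99, which is one of the acceptable totals mentioned above.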

Interval/ratio: not simply numerals, but numbers w/quantitative meanings. Can’t use bar or pie chart. To present distribution, must collapse lvls of variables into small groups.

-guidelines: 1. At least 6, but no more than 20 intervals. Lose too much info about distribution if too few, but more than 20 defeats the purpose of creating class intervals & data is not readily accessible.

- intervals must all have same width, encompass same # of values to be comparable (can have larger open-ended category at the end)

- don’t want them to be too wide. Want to be able to consider every case within a given interval to be similar, makes sense to treat cases within the interval as the same.

- must be exhaustive and mutually exclusive.
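
The guidelines above can be illustrated with a small sketch that collapses hypothetical ages into equal-width class intervals. The width, starting point, and helper name are assumptions chosen for the example. Each value lands in exactly one interval, so the intervals are exhaustive and mutually exclusive.

```python
from collections import Counter

# Hypothetical ages (interval/ratio-level data).
ages = [18, 19, 22, 23, 25, 27, 31, 34, 35, 38, 41, 44, 47, 52, 58, 63]

WIDTH = 10  # every interval has the same width
START = 10  # lower bound of the first interval

def interval_for(value):
    """Return the (low, high) class interval that contains value, e.g. (20, 29)."""
    low = START + ((value - START) // WIDTH) * WIDTH
    return (low, low + WIDTH - 1)

# Sort each observation into its interval and count the cases per interval.
grouped = Counter(interval_for(age) for age in ages)

for (low, high), count in sorted(grouped.items()):
    print(f"{low}-{high}: {count}")
```

With these values the data fall into six intervals, the minimum suggested above.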

Describing a distribution: interval lvl data

-create a line graph.

-the only pts w. any info are the dots. Connect to remind reader that original distribution was continuous.

-relative frequencies, bar charts, pie charts, interval-level data

Central Tendency versus Dispersion

A measure of central tendency indicates the most typical value, the one value that best represents the entire distribution

A measure of dispersion tells us just how typical that value really is by indicating the extent to which observations are concentrated in a few categories of the variable or spread out among all of the categories.

-evaluating central tendency. Important for evaluating sample size. Don’t want to only describe variables (see if covary in predicted ways)

-2 distributions could have similar central tendency, but be very different. Use more than one measure.

A measure of dispersion tells us how much the values of the variable vary. Knowing the amount of dispersion is important because:

- the appropriate sample size is highly dependent on the amount of variation in the population. The greater the variation, the larger the sample will need to be.

- we cannot measure covariation unless both variables do vary.

Measuring Central Tendency and Dispersion (Nominal-Level)

The mode is the most frequently occurring value—the category of the variable that contains the greatest number of cases. The only operation required is counting.

The proportion of cases that do not fall in the modal category tells us just how typical the modal value is. This is what Manheim and Rich call the variation ratio.

-bimodal distribution: 2 are tied for most cases

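V = f_nonmodal / N (where f_nonmodal is the number of cases that do not fall in the modal category and N is the total number of cases)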

-dispersion: what % of cases were not in the modal category? The proportion that does not fall in the modal category tells us how typical the modal value is. Manheim and Rich call this the variation ratio: the lower the variation ratio, the more typical and meaningful the mode.

– in the case of bimodal or multimodal cases, select one mode arbitrarily.
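
A minimal sketch of the mode and the variation ratio, using hypothetical nominal data (first language of 20 respondents). The variable names are illustrative only.

```python
from collections import Counter

# Hypothetical nominal data: first language of 20 respondents.
languages = ["English"] * 11 + ["French"] * 6 + ["Other"] * 3

counts = Counter(languages)
n_cases = sum(counts.values())

# most_common(1) returns the category with the most cases. If two categories
# tie, it returns the one encountered first, which is an arbitrary choice.
mode, f_modal = counts.most_common(1)[0]

# Variation ratio: the proportion of cases outside the modal category.
f_nonmodal = n_cases - f_modal
variation_ratio = f_nonmodal / n_cases

print(f"mode = {mode}, variation ratio = {variation_ratio:.2f}")
```

A variation ratio of 0.45 means that almost half of the cases fall outside the modal category, so the mode is only moderately typical here.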

Measuring Central Tendency and Dispersion (Ordinal-Level) I

Central Tendency:

-always present categories in order, natural order, should retain it

-central tendency based on order or relative position

The median is the value taken by the middle case in a distribution. It has the same number of cases above and below it. If even # of cases, take average of the two middle cases.

-cumulative frequency: adding up the raw frequencies tells us the # of cases that took that value or lower.

Dispersion:

The range simply indicates the highest and lowest values taken by the cases. Problem: could overstate variability. Range doesn’t tell us anything about how things are distributed btwn points.

The interquartile range is the range of values taken by the middle 50 percent of cases. It is called interquartile because the endpoints are a quartile above and below the median value.
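
The ordinal measures above can be sketched using cumulative frequencies. The categories and counts are hypothetical, and the way the middle 50 percent is located (the cases one quarter and three quarters of the way through the ranking) is one simple convention assumed for this example, not the only possible one.

```python
from itertools import accumulate
from math import ceil

# Hypothetical ordinal variable (interest in politics), categories in natural order.
categories = ["none", "low", "moderate", "high", "very high"]
freqs = [4, 7, 10, 6, 3]

# Cumulative frequency: number of cases that took that value or lower.
cum_freqs = list(accumulate(freqs))
n_cases = cum_freqs[-1]

def category_at(position):
    """Return the category of the case at the given rank position (1 = lowest)."""
    for label, cum in zip(categories, cum_freqs):
        if position <= cum:
            return label
    raise ValueError("position exceeds the number of cases")

# N is even here, but both middle cases (15th and 16th) fall in the same category.
median = category_at(ceil(n_cases / 2))
value_range = (category_at(1), category_at(n_cases))
# Assumed convention: ends of the middle 50 percent of cases.
middle_half = (category_at(ceil(n_cases * 0.25)), category_at(ceil(n_cases * 0.75)))

print("median:", median)
print("range:", value_range)
print("middle 50 percent:", middle_half)
```

In this example the range runs across every category, while the middle 50 percent of cases is confined to "low" through "high", which shows how the range can overstate variability.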

Measuring Central Tendency (Interval and Ratio-Level) I

The measure of central tendency for interval- and ratio-level data is the mean (or average value). Simply sum the values and divide by the number of cases:

Fall term grades: 70 75 78 82 85

GPA (or mean grade) = 78

-The mean is the preferred measure of central tendency because it takes into account the distance (or intervals) between cases. The fact that there are fixed and known intervals between values enables us to add and divide the values.

-The mean is sensitive to the presence of a small number of cases with extreme values:

- Group #1: 26,000, 28,000, 29,000, 32,000, 34,000, 36,000: mean ≈ 30,833, median = 30,500

- Group #2: 15,000, 18,000, 19,000, 22,000, 23,000, 25,000, 95,000: mean = 31,000, median = 22,000

When an interval-level distribution has a few cases with extreme values, the median should be used instead.

-Because the mean is subject to distortion, the mean value should always be presented along with the appropriate measure of dispersion.

-the mean is problematic when a few cases have extreme values, because it takes account of how far each case is from the others.
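
The effect of an extreme value can be checked directly with Python's standard `statistics` module. The sketch uses the fall term grades and the Group #2 incomes from these notes. Dropping the 95,000 case is an extra step added here purely to show how much that one case pulls the mean.

```python
from statistics import mean, median

grades = [70, 75, 78, 82, 85]                                        # fall term grades
incomes = [15_000, 18_000, 19_000, 22_000, 23_000, 25_000, 95_000]   # Group #2

grade_mean = mean(grades)        # sum of the values divided by the number of cases

income_mean = mean(incomes)      # pulled upward by the single 95,000 case
income_median = median(incomes)  # value taken by the middle case

# Remove the extreme case to see how sensitive each measure is to it.
trimmed = incomes[:-1]
trimmed_mean = mean(trimmed)
trimmed_median = median(trimmed)

print(f"grades: mean = {grade_mean}")
print(f"incomes: mean = {income_mean}, median = {income_median}")
print(f"without extreme case: mean = {trimmed_mean:.0f}, median = {trimmed_median}")
```

The mean drops by more than 10,000 once the extreme case is removed, while the median barely moves.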

Measuring Dispersion (Interval- and Ratio-level) II

The standard deviation is the appropriate measure of dispersion at the interval-level because it takes account of every value and the distance between values in determining the amount of variability.

The standard deviation will be zero if—and only if—each and every case has the same value as the mean. The more cases deviate from the mean, the larger the standard deviation will be.

We cannot use the standard deviation to compare the amount of dispersion in two distributions that use different units of measurement (e.g. dollars and years) because the standard deviation will reflect both the dispersion and the units of measurement.

N = the number of cases, Xi = the value of each individual case, X = the mean (see page 264).
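
The formula itself is not reproduced in these notes, so the sketch below assumes the usual population form that fits the symbols just defined: take the square root of the sum of squared deviations (Xi – X) divided by N. The textbook page may instead divide by N – 1, so treat the divisor as an assumption. The grades are the fall term grades used earlier.

```python
from math import sqrt
from statistics import pstdev

grades = [70, 75, 78, 82, 85]  # fall term grades from the earlier example

n = len(grades)                   # N: the number of cases
x_bar = sum(grades) / n           # X: the mean
squared_devs = [(x_i - x_bar) ** 2 for x_i in grades]  # (Xi - X) squared, per case

# Assumed population form: divide by N, then take the square root.
s = sqrt(sum(squared_devs) / n)

# Identical cases all equal the mean, so the standard deviation is zero.
s_identical = pstdev([78, 78, 78, 78, 78])

print(f"mean = {x_bar}, standard deviation = {s:.2f}")
print(f"library check: {pstdev(grades):.2f}, identical cases: {s_identical}")
```

`statistics.pstdev` computes the same population standard deviation and is used here only as a cross-check on the manual steps.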

Calculating Standardized Scores or Z-Values

-If we want to compare the relative position of two cases on the same variable or the relative values of the same case on two different variables like annual income and years of schooling, we can standardize the values by converting them into Z-scores.

The Z score allows us to compare scores that are based on very different units of measurement (for example, age measured in number of years and height measured in inches).

-Z-scores tell us the exact number of standard deviation units any particular case lies above or below the mean:

Zi = (Xi – X)/S

where Xi is the value for each case, X is the mean value and S is the standard deviation.

Example: person1 has an annual income of $80,000 and person2 has an annual income of $30,000. The mean annual income in their community is $50,000 and the standard deviation is $20,000

Z1 = ($80,000 – $50,000)/$20,000 = 1.5

Z2 = ($30,000 – $50,000)/$20,000 = – 1
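
The same calculation can be written as a small function. The income figures come from the example above. The schooling figures (16.5 years, mean 12, standard deviation 3) are hypothetical and are added only to show a comparison across different units of measurement.

```python
def z_score(value, mean, std_dev):
    """Number of standard deviation units that value lies above or below the mean."""
    if std_dev <= 0:
        raise ValueError("standard deviation must be positive")
    return (value - mean) / std_dev

# Incomes from the example: community mean 50,000, standard deviation 20,000.
z1 = z_score(80_000, 50_000, 20_000)
z2 = z_score(30_000, 50_000, 20_000)

# Hypothetical schooling figures, measured in years rather than dollars.
z_schooling = z_score(16.5, 12, 3)

print(z1, z2, z_schooling)
```

Under these assumed schooling figures, person1 would sit 1.5 standard deviations above the mean on both income and schooling, even though the two variables use different units.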

Topic Twelve: Statistics — Estimating Sampling Error and Sample Size

Overview:

What is sampling error?

What are probability distributions?

Interpreting normal distributions

What is a sampling distribution?

The sampling distribution of the sample means

The central limit theorem

Estimating confidence intervals around a sample mean

Estimating sample size—means

Estimating confidence intervals around a sample proportion

Estimating sample size–proportions

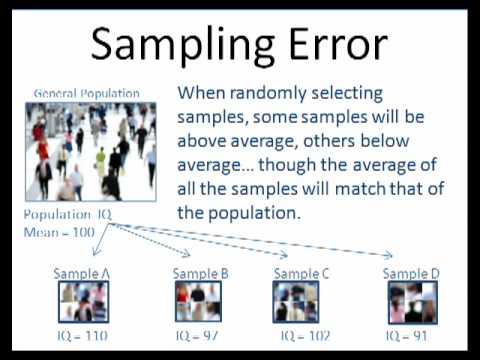

What is sampling error?

No matter how carefully a sample is selected, there is always the possibility of sampling error (i.e. some discrepancy between our sample value and the true population value).